Best Practices for Product and Solution Testing

Product and solution testing is often the first task to be ignored, shortened, or even removed from scope when trying to complete a new software implementation on time and on budget. However, effective testing can save your organization significant time and resources in the long run by identifying and eliminating issues before they become large problems. That’s why it’s so important to complete all testing phases and tasks during the implementation.

In this webinar, RPI Senior Solutions Architect Pitts Pichetsurnthorn and Project Coordinator Sean LaBonte share our best practices and recommendations for effective testing across all types of implementations, upgrades, and solution optimizations.

Transcript

Pitts:

Welcome to Webinar Wednesdays with RPI, and thank you for joining the best practices for product and solution testing. This is part of our Webinar Wednesdays series here at RPI. We do webinars the first Wednesday of every month. Today we’re going over our testing best practices, and right after this we’ll go through our Brainware for Transcripts at 1:00.

Next month in September we have a couple of webinars planned. The big one is our 7.2.3 release of ImageNow or Perceptive Content. Sean will be going over that release and the learn mode updates as well at 11:00. Then at 1:00 p.m. we’ll be going over our new Yoga Link product, which is built to work with Yoga but it’s really a Chrome extension that’s going to allow you to link directly out of Chrome into your learn modes.

As I said, my name is Pitts. I’m a senior solution architect here at RPI. I have a little over six years of experience working on the Perceptive Content platform, extensive experience really within the clinical health care space, so I know a lot about the clinical products and the solutions that we use within that vertical. I love my dog Tofu.

Sean LaBonte:

My name is Sean LaBonte. I’m a project coordinator with RPI. I manage multiple projects including upgrades, health checks and new solution designs. A little bit more of a cat person, and on an unrelated note I am still Kansas City’s most eligible bachelor. On today’s agenda we’re going to cover a little bit about us, RPI Consultants, and go into an introduction to testing methodology, the testing stages involved, and the management portion of those.

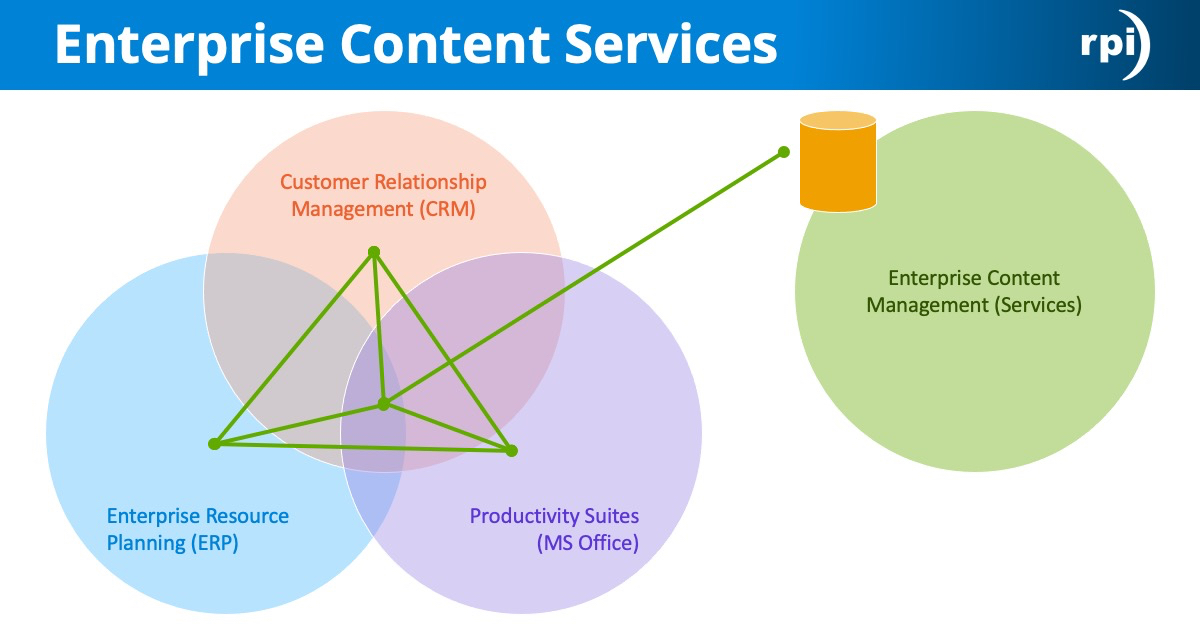

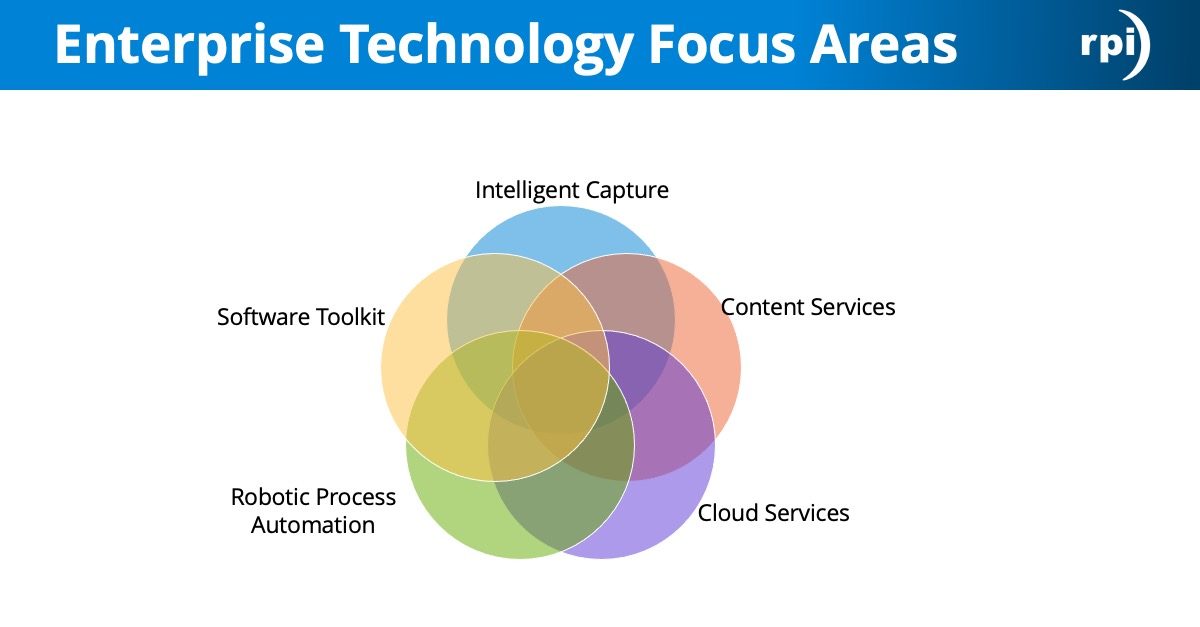

At the end we’ll have a summary and a section for Q&A. A little bit about us: we’re a comprehensive professional services organization with almost two decades of experience designing, implementing, and supporting ERP, ECM, and advanced data capture solutions. We have over 80 full-time consultants, project managers, and technical architects, with offices in several locations across the US.

We provide services including technical strategy, design enhancement, support in the realms of Perceptive, OnBase, Brainware, Kofax and Infor.

Pitts:

Perfect. Introduction to testing methodology. Testing is a vital part of every project we do here at RPI. While it’s often overlooked because it is a pretty tedious process, it’s vital in ensuring that the solution and the products that we’re implementing go smoothly. What we wanted to go over here was really just a high-level overview of what we do as far as testing, and how most of our projects become successful because of the amount of testing that we put into them.

With testing, there are four main principles here, four main objectives for every testing cycle that we do. The first one and probably the most important one is to provide visibility for the stakeholders. These are the stakeholders who made the decision to purchase the product or solution and bring it into the day-to-day lives of your end users. They need to be up to speed on where everything stands, what the flaws are, and what the status of the project is before we actually go into cut over and go live.

The other main point here is that we like to identify any failures, bugs, or defects and log those so that they can be corrected and addressed. We also want to ensure that the solution meets the functional requirements. We’ll go through a design as we gather our business requirements, and then when we develop these components and solutions we want to make sure all of that addresses those functional requirements.

Then the last one here is that we want to make sure that there are no undesirable side effects as a part of introducing this new solution. Now, testing is not a one-size-fits-all, but we all have the same goals here with our testing efforts. How do we accomplish this? This is done through using a variety of resources. Again, these are users or tools. As we go through our testing cycles, we bring in different sets of users so that more people on the client side could be exposed to the new solution.

We also use a variety of tools to help with this, everything from issue management to test scripts and test cases. All of those tools are used to bring together this picture. We also go through different testing cycles, so different testing cycles have different objectives. Most testing cycles are done in an iterative fashion. This way we can be very thorough with what we’re trying to deliver.

Like I mentioned, there’s endless testing cycles, techniques, tactics. It differs depending on who you’re talking to, what kind of product you’re working with, or what kind of solution that we’re trying to implement as well. It’s not a one-size-fits-all. Again, the goals are pretty much the same. We do a lot of projects here at RPI, everything from a brand new implementation to an upgrade, to expansions on existing solutions.

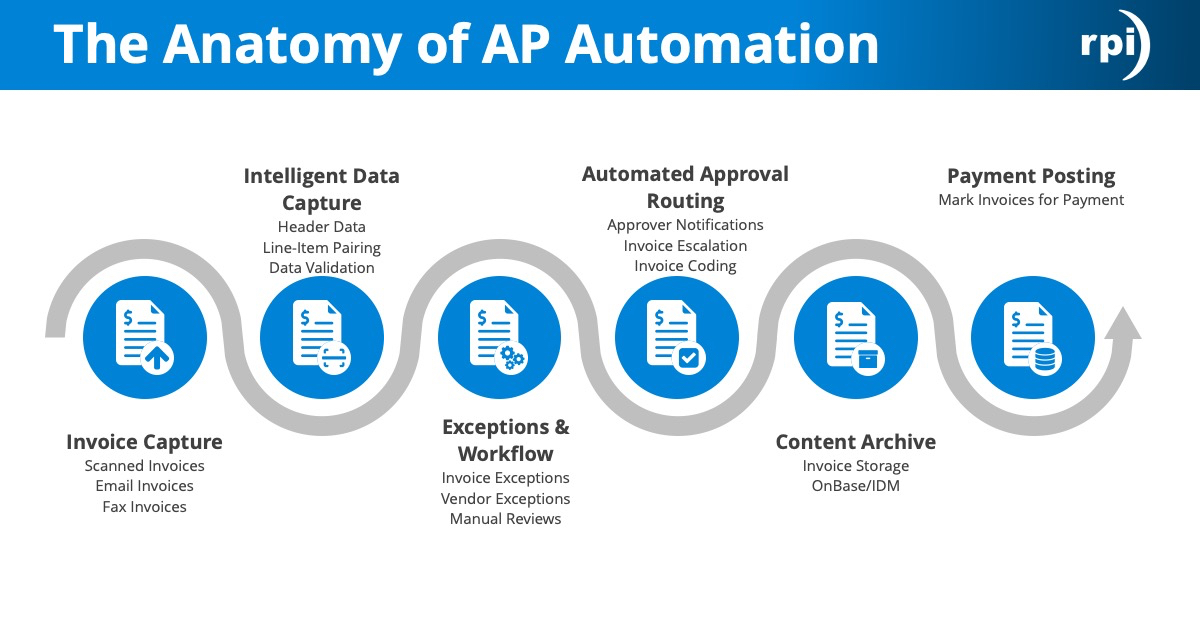

Depending on the project at hand, the testing might be a lot more extensive or it could be a lot more accelerated as well. What we have up here is our typical project methodology for most of the projects we do here at RPI, with testing placed in it. On the left we start with our planning phase. After the project kicks off, our project managers and project coordinators meet with the client team to figure out what the timeline looks like, what the requirements are, and what is in and out of scope, to plan the engagement for the rest of the project lifecycle.

Then we go into a design phase. The design phase is where one of our consultants will go through and sit down with your subject matter experts, the stakeholders and figure out what those business requirements are, get all of that documented on paper before we move into development and implementation. Once that’s finished we go into our formal testing cycle and validation. Once that’s all approved, then we can move into a cut over and then go through our go live event.

What we have listed here are some of the main testing cycles that we wanted to call out in this presentation. The very first one is our unit testing. After the requirements are gathered during design, we start our development, so whether that’s writing a new script or configuring workflow queues within your solution, whatever that might be. Unit testing is being performed pretty much internally. It’s something that our consultants and developers do while we get everything in place to make sure it meets those requirements from design.

Then once we’re comfortable that everything is built out and we’re ready to move on to the next step, we go into a system integration testing. This is where we introduce more of your power users, your system admins, get them up to speed on everything that we’ve been building so far, and it allows us to have our first opportunity to do an end-to-end test with the solution at hand.

Once that’s all finalized and everyone’s up to speed including the core project team, we move into our UAT, so the user acceptance testing. In this phase we broaden the scope of the users who are involved, extending that to the end users and more of the power users out there to make sure that the solution that we’ve been working on, that we’ve been developing works within their real-life scenarios. Once UAT is finished then of course we go into go live and that’s where we get our green flag that says everything that we’ve done so far works, and that we’re ready to take this live.

Really quickly, some other testing concepts you guys might have heard of. Regression testing, this is really just testing previous functionality just to make sure what we’re introducing didn’t break any previous functionality, so your other workflows. Hopefully none of that’s being affected, but we do all that through regression testing. Smoke testing. This is a term used for any preliminary testing. It’s very accelerated.

It’s something that we do just to see feedback very quickly, so we know do we need to keep pursuing this process or is this something that we can just drop because it’s not going to work functionally.

Then the last one here is non-functional testing. Non-functional testing is the idea of testing indirect components, so while we might be writing a specific script to do something, we want to make sure that this isn’t going to break something else within the system that’s not really related. Those are items such as performance testing or stress testing.

Sean LaBonte:

Now, we’re going to take a look at the three most common testing stages that Pitts had outlined on the infographic: unit testing, SIT, and UAT. We’re going to take a look at each of their objectives, the scope of the work, and the typical best practices involved. Unit testing, this is an isolated testing of individual components during development and configuration of an application.

One example of unit testing could be a developer testing a single function or a few lines of code to see how it behaves and whether it conforms to business requirements, or possibly a consultant testing that a brand new queue in ImageNow is functioning as expected. Really your objectives here are to start the development and logging of test scripts and test cases, perform testing in an iterative fashion where it makes sense, along with regression testing, and of course validate the design.
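To make the "developer testing a single function" example concrete, here is a minimal sketch using Python’s built-in `unittest` module. The `route_document` function, the document types, and the queue names are all hypothetical, made up for illustration; they are not part of ImageNow or any RPI solution.

```python
import unittest


# Hypothetical routing rule, invented for illustration only. It is not an
# ImageNow or Perceptive Content API.
def route_document(doc_type: str) -> str:
    """Return the name of the workflow queue a document type should land in."""
    queues = {
        "invoice": "AP Review",
        "patient_record": "HIM Indexing",
    }
    # Anything unrecognized goes to a catch-all queue for manual handling.
    return queues.get(doc_type, "Exceptions")


class RouteDocumentTest(unittest.TestCase):
    """Each test checks one small, isolated behavior of the function."""

    def test_known_type_goes_to_its_queue(self):
        self.assertEqual(route_document("invoice"), "AP Review")

    def test_unknown_type_goes_to_exceptions(self):
        self.assertEqual(route_document("fax_cover"), "Exceptions")
```

Saved to a file, these tests can be run with `python -m unittest <file>`. Rerunning the same tests after every change is also a cheap form of regression testing at the unit level.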

Now, there could be cases where you find holes or gaps, so this is definitely where you want to identify those as this is your lowest costing stage of testing involved. Next up would be system integration testing or just plain integration testing. This is the overall testing of all components and how they work together, or with external dependencies that make up an entire solution.

An example would be testing your application’s ability to pull in data from the cloud, or testing if you were pulling in metadata from Brainware to ImageNow. This is your first time that you get to really go through end-to-end testing. In SIT we bring in Superusers, power users. We begin the process of knowledge transfer, and moving ownership of the application to the client. Your exit criteria here for the stage is really making sure that you have full documentation of your solution and making sure all high-priority ticket items are taken care of and cleared on your testing plan.

Pitts:

Perfect. The last one here is user acceptance testing. Again, this is UAT. This is considered the final phase of testing before everything goes live. The idea is that we’ve expanded the scope to the furthest that we’ll be doing for our testing efforts, where we bring in more of the end users and the power users letting them validate all their real-world scenarios, and then really to assess the operational readiness. Are we ready to take this live? Does this meet all the criteria that we’ve been looking for?

This is a more formal approach to testing. There’s typically a kickoff. There’s documentation. There’s a mini-timeline for UAT, how many rounds are we going through, what needs to be addressed. Then it’s also more of a curated approach. Here we would produce documentation such as test cases or test scripts to walk those end users through what we’re trying to test, and then making sure all of the testing scenarios are accounted for here.

At the end of UAT we would go through a sign off process where we meet with everybody who’s involved in testing. If everyone gives us the green light then we’re ready to move on to cut over and then go live. Again, this is probably the most important testing cycle, just because it ensures all those business functions are operating as expected, and that we have included a majority of the users into the scope of the testing.

Sean LaBonte:

Now we’re going to go over the testing stages but from the perspective of the management side. First off it all starts off with planning. You’re going to start off by developing your test plan. This is not the same as developing test cases. This is a breakdown of your overall testing in a logistical, strategic, and script sense. You’re determining your roles, your communication plans, your test cases. This all goes into it.

It’s your overall practice and execution of testing from beginning to end. You got to start scheduling early, work with your business teams, tech team, dev, QA. You need to determine if more hardware is needed for testing, what users go in what roles for testing, so definitely start that as early as possible.

Once you have all this set up and determined, you can start off with a formal kickoff call for UAT. You’re going to define your purpose, build a mindset and testing strategy for end users so that they know what to look for and how to properly log their outcomes. You’re going to set your communication plan in action, and describe sign off for each case.

Of course, this takes a lot of planning, so you’re going to need to make sure everything’s highly visible, and you’re going to need tools and resources to do so. Some of those might be for issue management, for instance. Here at RPI we highly, highly recommend using Smartsheet. We use that for all of our cases, all of our plans. For instance, you might need to make sure your test cases are highly visible. You’re going to need to have your business requirements or responsibilities for sign off, your impact levels, names, IDs, and passes and fails all highly visible and all highly accessible.

From those fails on your test cases, you’re going to have the need for an issue log. You’re going to want that visible as well. This is where you’re going to determine the name of the issue, descriptions, progress, level of effort, timeframe, responsibility, and then you’re going to need to classify each item.

As you go through UAT, you’re going to find either bugs or genuinely new items, so you need to determine whether each one is in or out of scope and give it a priority. Typically in a waterfall methodology, you’re going to want to treat out-of-scope items as post-go-live enhancements. If you have agile, of course, the project management team can determine if a sprint is necessary in your UAT sessions.
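The issue log and the scope decision described here can be sketched as a small data structure plus a triage rule. This is a minimal illustration in Python, not how Smartsheet or any RPI template is actually set up: the field names, the `Methodology` enum, and the disposition strings are assumptions, and the "fix during UAT" outcome for in-scope items is an assumed default.

```python
from dataclasses import dataclass
from enum import Enum


class Methodology(Enum):
    WATERFALL = "waterfall"
    AGILE = "agile"


@dataclass
class Issue:
    # Hypothetical columns loosely mirroring the issue log described above.
    name: str
    description: str
    owner: str            # who is responsible for the item
    is_bug: bool          # False means a new item rather than a defect
    in_scope: bool
    priority: str         # e.g. "high", "medium", "low"
    status: str = "open"  # progress of the item


def triage(issue: Issue, methodology: Methodology) -> str:
    """Suggest a disposition for an issue raised during UAT."""
    if issue.in_scope:
        # Assumed default: in-scope items are addressed before sign off.
        return "fix during UAT"
    if methodology is Methodology.WATERFALL:
        return "post-go-live enhancement"
    # In agile, the project management team decides whether to add a sprint.
    return "review for sprint"


new_request = Issue(
    name="Add a second approval queue",
    description="Requested by a tester during UAT round 1",
    owner="Project team",
    is_bug=False,
    in_scope=False,
    priority="low",
)
print(triage(new_request, Methodology.WATERFALL))
```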

Sometimes show stoppers happen, of course. You need to analyze their impact. If you do determine that a show stopper has made its way through unit testing, through SIT, and has come up now, you need to assign your best resources to it for the quickest fix possible. Have them recommend how to test, and analyze again for future use.

Pitts:

Perfect. We also have test scripts, test cases, and test scenarios. Now these terms are used widely throughout the industry. They can all mean different things. We’ve taken a step here and tried to define a lot of these items. With test scripts, those are really a set of instructions given to these end users so that they’re able to test the system as we’d like them to.

The idea here is that if you have an end user who has no context to the product or the solution at hand, you should be able to hand over a test script that walks them through exactly what they need to be testing. This is as granular as logging into the system, clicking this button, clicking this button, and then what is supposed to happen when we do those actions.

A test case is more of an idea, so we want to test a specific idea. An example of that is if we’re testing to see if a scanning will go into a specific queue. The test case is we’re going to make sure that we scan these patient-level documents and that these are for sure going to drop into a queue, as opposed to routing all the way through the workflow automatically.

Then the last one here, a testing scenario. This is more of an objective. With testing scenarios we want to make sure we identify all potential scenarios to be as thorough as possible. In that example we can be testing patient-level documents, we can be testing encounter-level documents, or account-level documents. We want to make sure that we’re testing all of these as much as we can.

Even more granular than that we can test patient-level documents where we’re scanning, or patient-level documents where we’re doing an import or a cold feed, or even patient-level documents where we’re retrieving from email or fax as well. All of those would be different scenarios that we want to make sure we include just so we’re thorough with our testing efforts.
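Listing scenarios this way amounts to crossing each document level with each way a document can arrive. A minimal sketch in Python, assuming the level and capture-method labels below as illustrative names only (they do not come from any product configuration):

```python
from itertools import product

# Illustrative labels based on the examples above; adjust to the solution
# being tested.
document_levels = ["patient", "encounter", "account"]
capture_methods = ["scan", "import or COLD feed", "email", "fax"]

# Every combination of level and capture method is one testing scenario.
scenarios = [
    f"{level}-level document via {method}"
    for level, method in product(document_levels, capture_methods)
]

for scenario in scenarios:
    print(scenario)
```

Three levels and four capture methods give twelve scenarios, which is a quick way to check that none were left out of the test plan.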

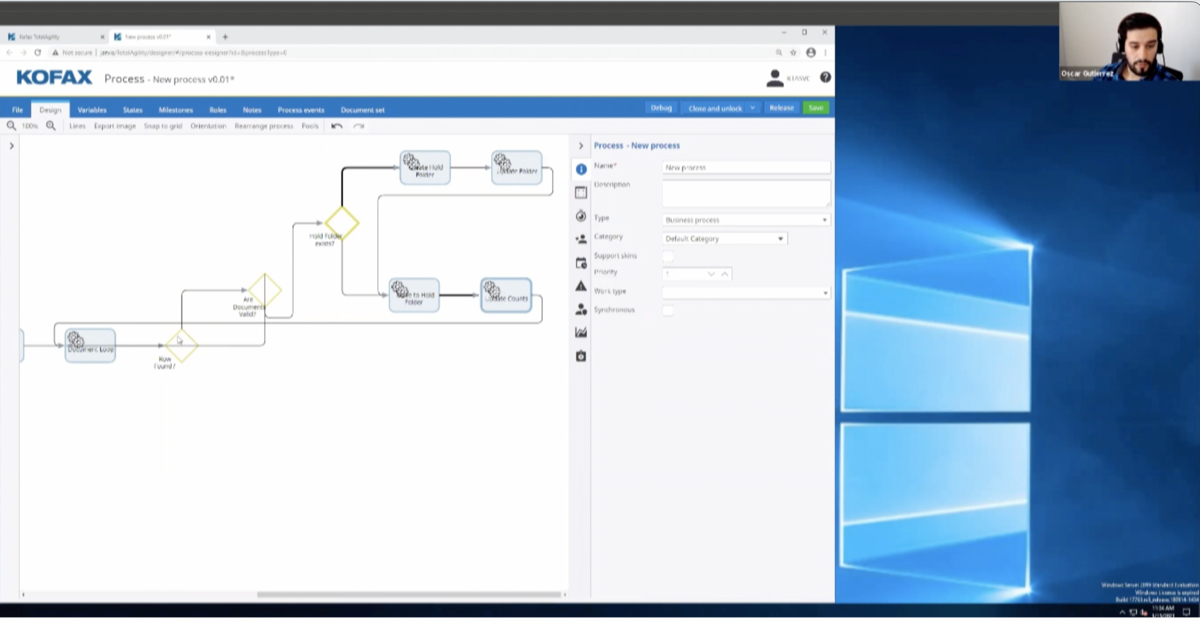

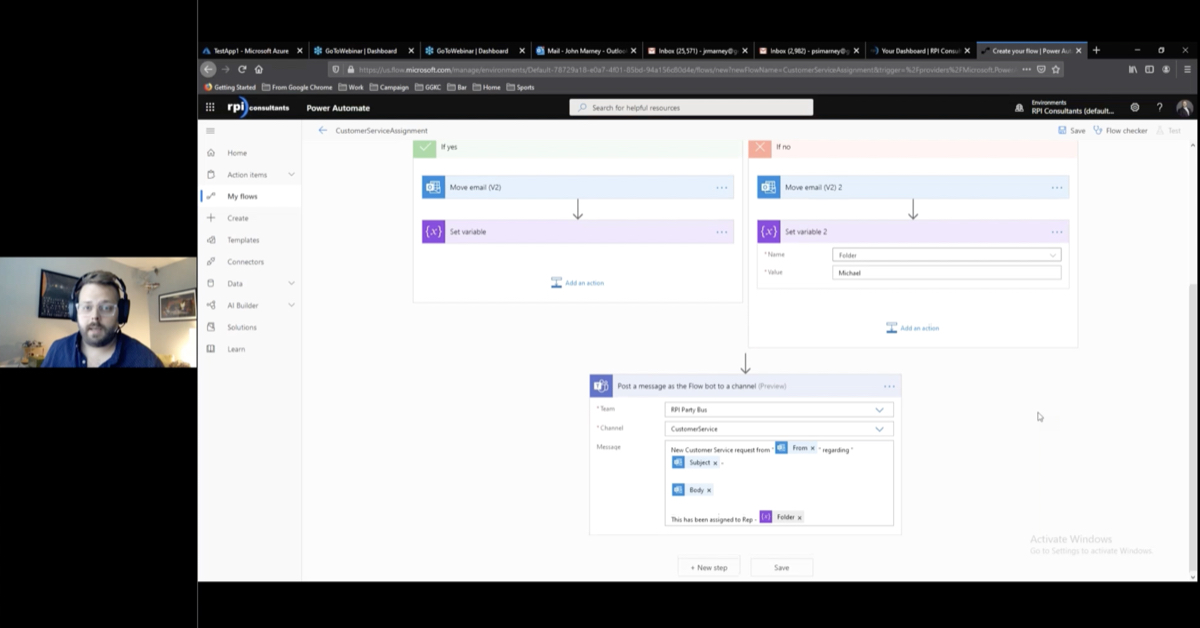

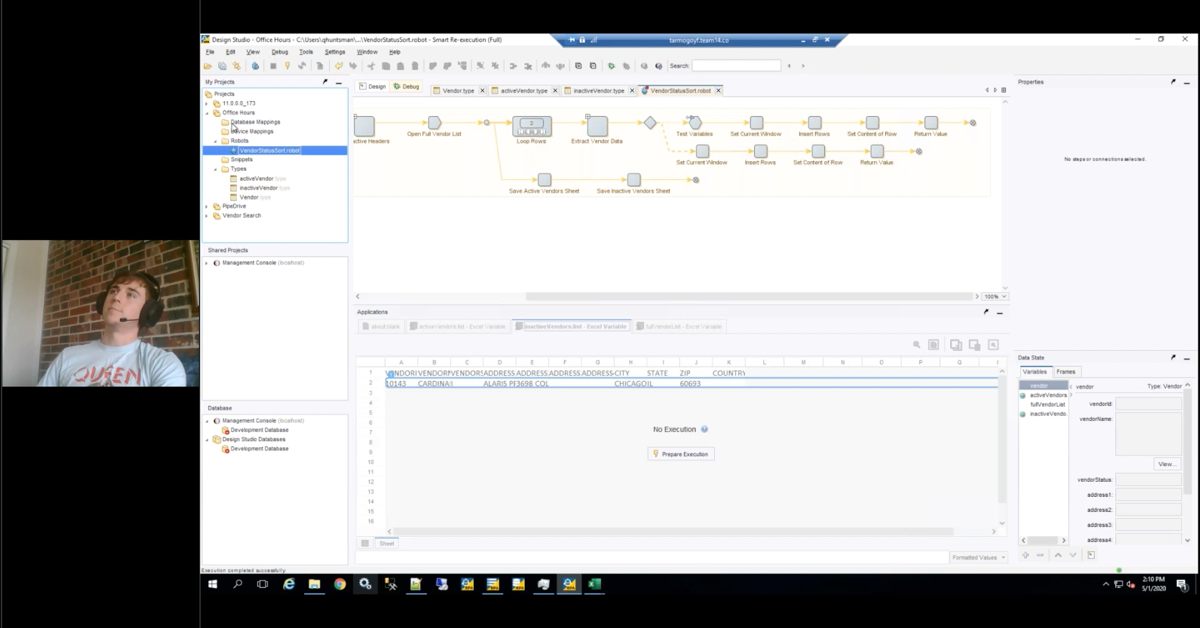

With test cases and test scripts, this is just an example we have up here on the screen. This is a test script that I use frequently. This is for an HIM scenario, so a medical records department. When we build an HIM solution, we want to make sure we account for multiple scenarios here. This is typically done within an Excel spreadsheet just because it offers us a little bit more flexibility as far as formatting of the items.

What you have here with each line item is a specific test case or a test script. This tells you the functionality that we’re trying to test. When you select on one of the line items it’ll actually move you forward into a specific tab on that spreadsheet. This goes into more of the test script, so the more of the case-by-case. This is exactly what we’re trying to do. The items are prep a batch of documents, make sure it has this many pages, and this many separator sheets or barcodes. Then we’re going to scan those in.

After each step there’s an expected results column, and then the end users could then fill in did this pass, did this fail, this is what I saw, and then that reports back to the main page. The idea here is that we’re curating this approach, so the end users are walking through all the steps that we’ve identified in order to perform this test.
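The same layout, with steps, expected results, a pass/fail outcome, and a roll-up to a summary page, can be sketched as a small data structure. This is a hedged Python illustration of the idea rather than the actual spreadsheet: the class names, fields, and status labels are assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Step:
    instruction: str
    expected_result: str
    passed: Optional[bool] = None  # None until the tester records an outcome
    notes: str = ""                # "this is what I saw"


@dataclass
class UatScript:
    title: str
    steps: List[Step] = field(default_factory=list)

    def status(self) -> str:
        """Roll step outcomes up into a one-line status for a summary page."""
        if any(step.passed is False for step in self.steps):
            return "Fail"
        if self.steps and all(step.passed is True for step in self.steps):
            return "Pass"
        return "Not complete"


script = UatScript(
    "Scan a patient-level batch",
    [
        Step("Prep a batch with separator sheets", "Batch is ready to scan"),
        Step("Scan the batch", "Documents appear in the expected queue"),
    ],
)

script.steps[0].passed = True
print(script.status())  # Not complete: the second step has no outcome yet

script.steps[1].passed = False
script.steps[1].notes = "Documents did not appear in the queue"
print(script.status())  # Fail
```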

Then the last thing here, testing cycles and scalability. Often with UAT it’s performed in multiple rounds. This allows for consistent testing, and then back and forth and updates as we need to. Typically the way I see this done is through break and fix weeks. Within the break week this is typically the very first week after we kickoff with UAT. We’re all out of the system. There are no code changes, no configuration changes. The end users are in the system testing it.

They’re working through all their test scripts. If issues come up, they log those issues. They let us know. No changes are being made until the end of the week. Typically at the end of the week we’ll do a recap as a group to figure out what went wrong, what are the big issues, prioritize everything. Then the following week is a fix week. During the fix week the users are out of the system, no testing is being done, and we’re addressing all the bugs and issues that have come up.

This is iterative. We can do multiple rounds. A larger medical records solution might do four or five rounds of break fix, so that’s eight or ten weeks of testing altogether for UAT. We go through these rounds and then they’re more of an iterative approach just so that we can eliminate those issues as they come up. The idea is that when the last round is completed and everybody’s comfortable, we ask for UAT sign off, and then we can move into our cut over step.
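The arithmetic behind those numbers is one break week plus one fix week per round. A minimal Python sketch that lays out such a calendar, assuming each phase lasts exactly one week and using an arbitrary start date:

```python
from datetime import date, timedelta


def break_fix_schedule(start: date, rounds: int):
    """Return (round, phase, week_start) tuples for alternating break and fix weeks."""
    schedule = []
    week_start = start
    for round_number in range(1, rounds + 1):
        for phase in ("break", "fix"):
            schedule.append((round_number, phase, week_start))
            week_start += timedelta(weeks=1)
    return schedule


# The start date is an arbitrary Monday chosen for illustration.
plan = break_fix_schedule(date(2025, 1, 6), rounds=4)
for round_number, phase, week_start in plan:
    print(f"Round {round_number} {phase} week starts {week_start}")

print(len(plan))  # 8 weeks for four rounds
```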

Testing is definitely not a one-size-fits-all, but the goal really is to get to a point where everyone is comfortable going live. If the project is a lot smaller and we need more of an accelerated approach, I’d say for sure we can skip a lot of those items. Again, the point is to make sure we’re all comfortable so that we’re going live and that the go live is going to be as smooth as possible, minimizing a lot of the issues once we’re live.

There are a lot of tools out there to help with this process. Sean mentioned Smartsheet. We use Smartsheet a lot here at RPI. There are even tools out there to help automate the process for testing. Towards the start of the process where we do our unit testing for development, there are tools that can help us run through all the test cases we develop, to automate and speed up that process.

That’s all we had for testing today. There is a moderator standing by for questions, so feel free to key those into the GoToWebinar box. We’re going to take questions.

Speaker 3:

We do have a couple. The first is if I have a project with a very fast timeline, what is the bare minimum or what kind of testing should I be performing?

Pitts:

Like I said, the main goal is to help go live go smoothly. Assuming all the requirements are gathered accurately and everything, we should be able to develop and build on that and walk all the way through to the end. If we were to skip a lot of those testing efforts, I think the most important testing cycle is UAT. That user acceptance testing at the end, it doesn’t have to be eight to ten weeks. It could just be one round if that’s all that’s needed, depending on the complexity of the solution at hand.

UAT is important because you are introducing end users. You’re increasing the scope. Everything up to that point has been maybe two or three users looking at the system, looking at the solution, testing it. If we expand the scope to more of the end users and the power users, they may catch something that we missed. Again, it’s not a bulletproof way to make sure go live goes super-smoothly, but I think it’s probably the most vital component if you are working with an accelerated timeline.

Speaker 3:

Thank you. Two related questions. What tools do you find effective in managing testing and reporting results, and what is Smartsheet?

Sean LaBonte:

Definitely you can utilize Google Sheets, Excel, any kind of spreadsheet, just like the great example Pitts had up here. We use Smartsheet primarily because of the simplicity of it being web hosted, so users have access to it. It can provide a lot more in-depth analytics, and you can provide everyone a dashboard as well.

Pitts:

The visibility part of it is pretty big as well. We were working with a client out in the UK, so our time zones were different. They would be working during our night, and then I’d come in in the morning, check Smartsheet, look at all the issues they’d logged, and address them during the day, and by the time they got into the office everything would be updated. It’s like a break fix scenario, but instead of weeks at a time it was just a couple of hours.

Smartsheet was very helpful in that situation, because we all had a central place where we could be logging our issues and logging the updates. If they had issues, they could attach screenshots to those tickets as well. I could address the tickets and let them know that an item had moved into a ready for testing stage, so we have different statuses as well. The visibility in the tool is very, very powerful.

Speaker 3:

What’s Smartsheet?

Sean LaBonte:

We could cover that definitely. Smartsheet is a web-hosted application. If you’ve ever worked with us, you’ve seen that we host project schedules, plans, test cases, and cut over plans there for all of our projects, again for the high visibility. You can build out just about whatever you want from it, whether it be actual reporting tools or just pure analytics and numbers on a dashboard. Going back to what we were talking about, really it’s an Excel sheet on steroids.

Pitts:

It’s pretty powerful. We do our project plans in there, and issue management is all in Smartsheet as well. Like Sean mentioned, we can track hours in there as well, build dashboards as we need to. It’s just our tool of choice really for project management items.

Speaker 3:

It’s collaborative and people can access it remotely.

Sean LaBonte:

It’s collaborative and people can access it remotely as needed.

Pitts:

For sure.

Speaker 3:

Another question is, is there any software or a systematic approach to perform regression testing for workflow functionality? This is probably specific to ImageNow.

Pitts:

Not that I’m really aware of. I think typically when we go into design we want to document as much of this as possible, so when we’re introducing a new solution we want to be cognizant of all the other solutions that might be affected as well. When we go through our unit testing, we do to a certain extent go through our own regression testing. Those would really just be targeting pieces of functionality that we know might be affected.

When I go through UAT and create those test cases, a lot of my test cases are regression test cases, so we want to make sure if I’m introducing a new method of scanning for this particular workflow, I want to make sure all the other methods still work the same way, the workflow still works the same way, all the scripts are firing as normal. The idea is we want to make sure we encompass a lot of those testing scenarios, whether they’re directly related to the changes at hand or if they’re specifically regression testing scenarios.

That’s how we incorporate regression testing into this. There’s not an easy automated way of doing that. It’s more of just we have to be aware of what we’re changing, and then incorporating that into our testing efforts as well.

Sean LaBonte:

It’s a manual process for sure.

Speaker 3:

I would say there’s a couple of things that can help inform your test cases there. The first being having proper reporting in place prior to starting to be sure that you know what’s coming in and out of your workflow. The other being the … Well, I forgot but there was another thing. I’ll circle back on that. Next question, does the proposed approach or does your proposed approach change for an upgrade versus a new project or an enhancement?

Pitts:

Definitely. Those are the three main cycles. We talked about unit testing, talked about integration testing, and then talked about UAT. Typically with an upgrade we would do something similar, but with the upgrade our unit testing is once we install the new server and the new clients we want to make sure we can still connect, make sure those pieces still work the way they’re supposed to work.

Then when we do integration testing we bring in those end users, let them know that they’re testing, and they probably have specific pieces in mind that they wanted to test with, so more complicated pieces of their solution. Once we do an upgrade, it’s a big deal to switch out the server. We want to make sure the functionality still works there and then we definitely go into UAT.

With upgrades the UAT is a little different, because we have to test everything. There aren’t really specific components that we’re looking to change, because everything has changed at this point. UAT really depends on the project, depends on your timeline, how many resources or how many hours and weeks you want to dedicate to the UAT efforts here. It’s all going to differ based on the project at hand.

If it’s something as simple as a new iScript you needed, so maybe one or two queues and an iScript, this could all be done within a matter of weeks, like two or three weeks as opposed to a full-on month cycle or something like that. It just depends on the project at hand. Of course, every time we go through an engagement like this, we’ll sit down with you and walk you through what we think is appropriate for testing, what might be overkill, and what might not be enough, so that we make sure we set aside the time for testing.

As I said, testing is a pretty important part because it’s going to allow us to have a smoother go live and cut over, and then transition on to your end users as well.

Speaker 3:

The other thing especially in an enhancement project, and this ties into the second point I was going to make, proper documentation of your current state so that you know, so that everybody knows what all needs to be tested is important.

Pitts:

Exactly.

Speaker 3:

I believe those were all the questions we had, so thanks guys.

Pitts:

Perfect. Just as a reminder, the slide deck will be sent out to all our attendees. This webinar is recorded. It’ll be posted on our website. We do have additional resources here. We have a quick knowledge base that we spun up with a lot of frequently asked questions, a lot of topics that we like to post on there, and then all of the previous webinars can be found at webinars.

Thank you.
